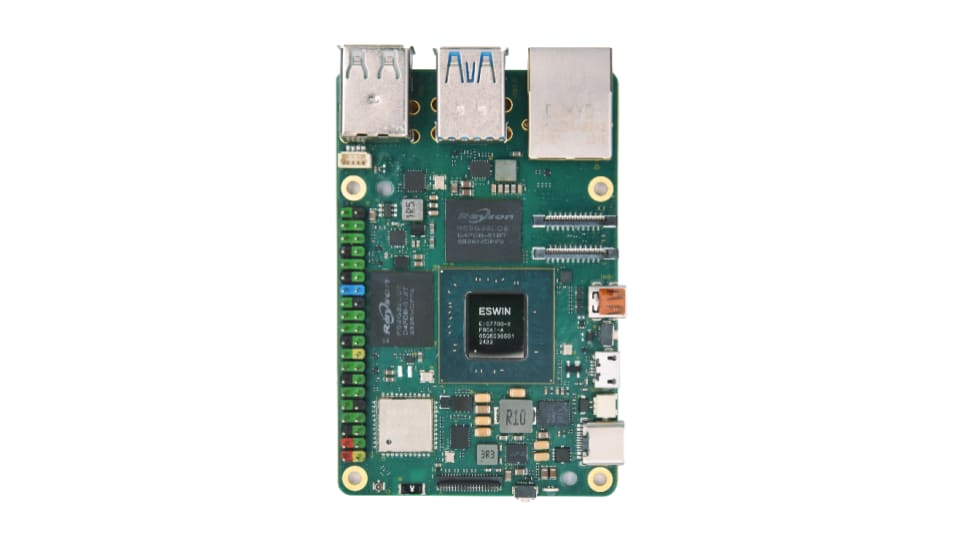

ESWIN Computing partners with Canonical to unveil a low-cost, high-performance RISC-V SBC with Ubuntu as the preferred operating system

We are excited to announce that ESWIN Computing, in collaboration with Canonical, is bringing Ubuntu 24.04 LTS to the ESWIN Computing EBC77 Series single board computer (SBC). The EBC77 is a cutting-edge platform for education, embedded and edge systems, and general-purpose computing. This partnership lets developers using the EBC77 Series SBC tap into Ubuntu's powerful ecosystem, making it easier to integrate with the wider open source community and accelerating innovation in RISC-V software development.

An advanced RISC-V-based single board computer

The ESWIN Computing EBC77 Series SBC delivers impressive performance, combining a 64-bit out-of-order (OoO) RISC-V CPU, a self-developed NPU, and LPDDR5 memory running at 6400 Mbps for a fast and stable web browsing experience, along with a rich set of expansion interfaces. This makes it a powerful general-purpose platform for a wide range of embedded use cases.

The board features include:

- ESWIN Computing EIC7700X SoC

- Quad-core RISC-V processor running at up to 1.8 GHz

- Self-developed NPU with up to 20 TOPS of AI computing power

- On-board 64-bit LPDDR5 @ 6400 Mbps

- 1x 8MB SPI NOR Flash

- 1x 4-lane PCIe GEN3 FPC slot

- 2x USB 3.2 Gen 1

- 2x USB 2.0

- 1x Micro HDMI Out

- 1x 4-lane MIPI DSI TX or 4-lane MIPI CSI RX

- 1x 4-lane MIPI CSI RX

- 1x Gigabit Ethernet RJ45 connector

- 1x Micro SD Card slot

- 1x On-board 802.11ac dual-band Wi-Fi module

- I2C, I2S, UART, and general-purpose I/O ports (mapped to the 40-pin header)
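To put the memory figure in context, the theoretical peak bandwidth of a 64-bit LPDDR5 interface at 6400 Mbps per data pin can be worked out directly from the specification above. This is a back-of-the-envelope sketch that assumes all 64 data bits transfer at the full rated speed at once; sustained real-world bandwidth will be lower.

```latex
B_{\text{peak}} = \frac{64\ \text{bit} \times 6400\ \text{Mbit/s}}{8\ \text{bit/byte}} = 51\,200\ \text{MB/s} \approx 51.2\ \text{GB/s}
```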

ESWIN Computing and Canonical: empowering innovation on RISC-V platforms

Building on the successful joint demonstration of DeepSeek by ESWIN Computing and Canonical at the RISC-V Summit Paris, Canonical is reaffirming its goal to expand Ubuntu enablement across RISC-V platforms.

ESWIN Computing’s launch of the EBC77 Series SBC with Ubuntu as the primary Linux distribution underscores Ubuntu’s position as the operating system of choice for innovators and developers. Together, ESWIN Computing and Canonical aim to accelerate innovation in the RISC-V ecosystem.

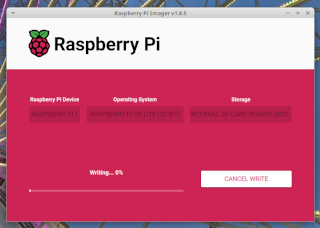

Experience the power of the EBC77 Series SBC with Ubuntu: download the image and follow the installation guide on GitHub to get started on the platform.

“ESWIN Computing’s launch of the EBC77 Series SBC integrates our strengths in high-end product design and computing power optimization with Canonical’s expertise in the Ubuntu OS and cloud-native technologies, jointly delivering more stable, flexible, and high-performance hardware-software solutions for diverse scenarios. We look forward to collaborating with Canonical to explore technological innovations, create greater value for industry partners and developer communities, and step into the new era of intelligent computing together!” – Haibo Lu, General Manager of the Intelligence Business Unit at ESWIN Computing.

“The launch of ESWIN Computing’s EBC77 Series SBC represents a significant advancement for the open-source community, empowering everyone from seasoned developers to newcomers to innovate on the RISC-V architecture. This highlights both the adaptability and robustness of Ubuntu, and underscores Canonical’s deep commitment to providing the best software environment for developers in the RISC-V ecosystem. Our collaboration with ESWIN Computing is an example of how we can make open standards and shared innovation truly thrive,” says Jonathan Mok, Silicon Alliances Ecosystem Development Manager at Canonical.

The EBC77 Series SBC will make its debut at RISC-V Summit China 2025, starting on July 17, 2025. Visitors in Shanghai will be able to experience the platform firsthand at the ESWIN Computing and Canonical booth.

Pre-orders for the EBC77 Series SBC will open soon. If you’d like to get your hands on one, please visit the ESWIN Amazon online store or the Taobao store for the latest updates.

Are you using RISC-V in your project?

Canonical partners with silicon vendors, board manufacturers, and leading enterprises to shorten time-to-market. If you are deploying Ubuntu on RISC-V platforms and want access to ongoing bug fixes and security maintenance, or would like to learn more about our custom board enablement and application development services, please reach out to Canonical.

If you have any questions about the platform or would like information about our certification program, contact us.

Join the community

We believe collaboration and community support drive innovation, and we invite you to join the Ubuntu and ESWIN Computing communities to share your experiences, ask questions, and help shape the future of RISC-V.

About Canonical

Canonical, the publisher of Ubuntu, provides open source security, support and services. Our portfolio covers critical systems, from the smallest devices to the largest clouds, from the kernel to containers, from databases to AI. With customers that include top tech brands, emerging startups, governments and home users, Canonical delivers trusted open source for everyone. Learn more at https://canonical.com/.

About ESWIN Computing

ESWIN Computing is a provider of intelligent solutions in the AI era. Focusing on smart devices and embodied intelligence as our two core application scenarios, ESWIN Computing is adopting next-generation RISC-V computing architecture, innovating domain-specific algorithms and IP modules, and constructing efficient and open software-hardware platforms to deliver highly competitive system-level solutions for customers worldwide. Learn more at www.eswincomputing.com.


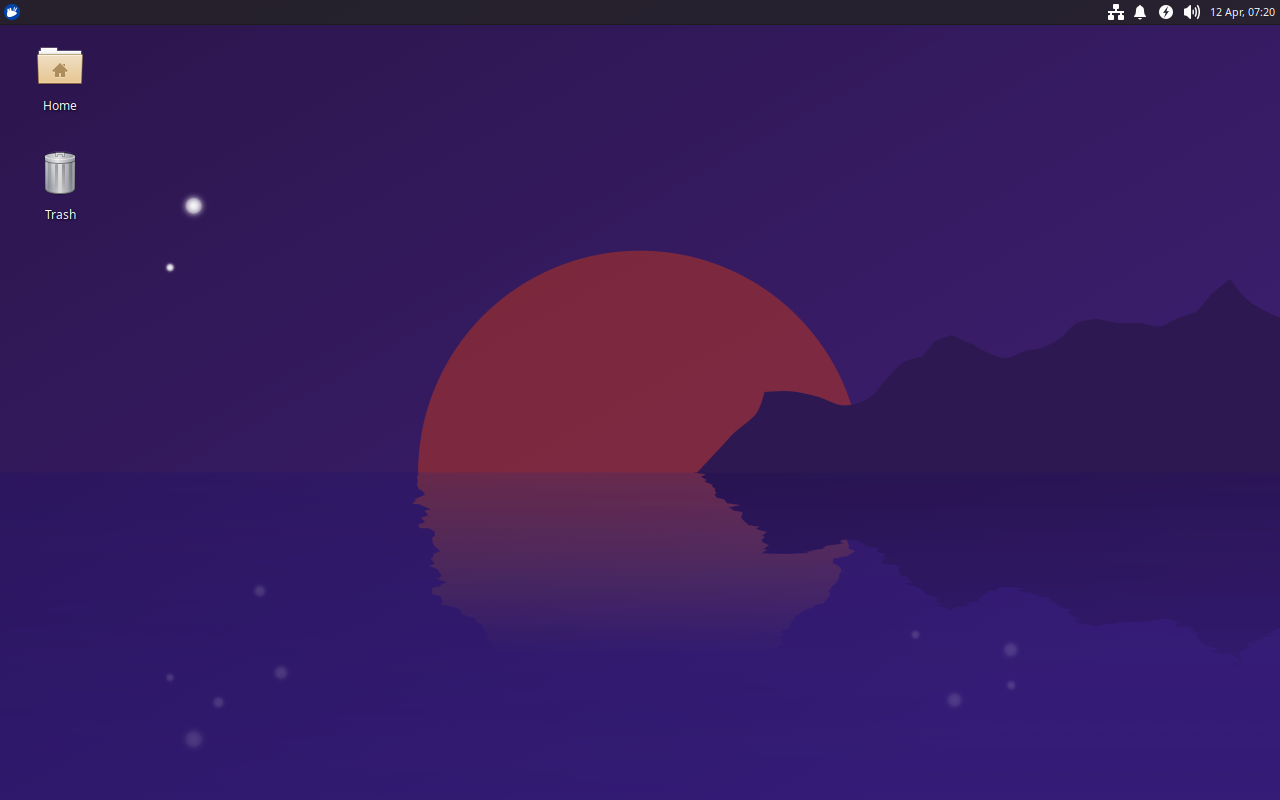

Xubuntu 25.04, featuring the latest updates from Xfce 4.20 and GNOME 48.

Xubuntu 25.04, featuring the latest updates from Xfce 4.20 and GNOME 48.

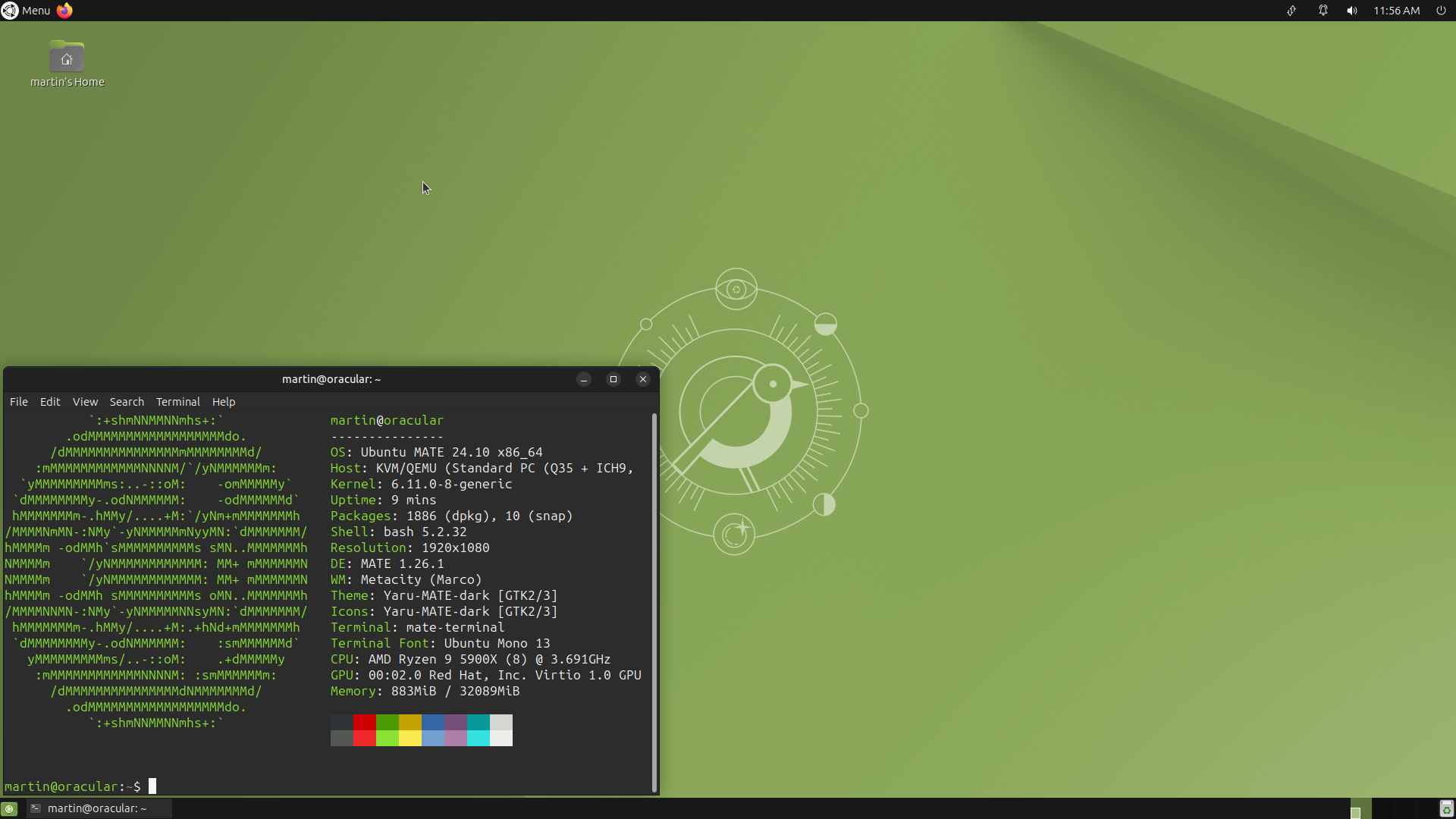

Ubuntu MATE 24.10

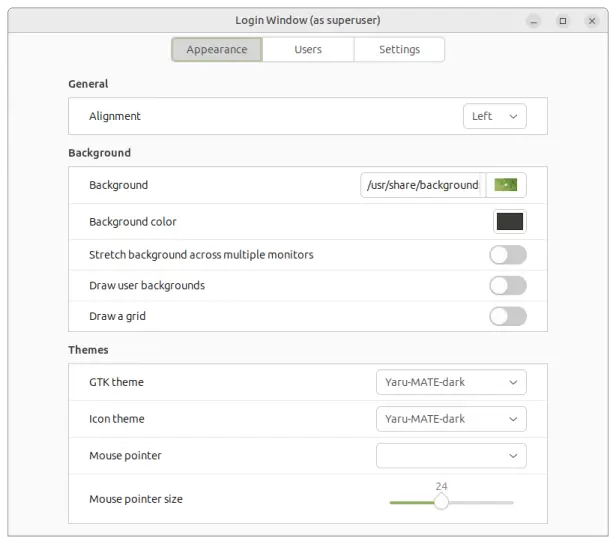

Ubuntu MATE 24.10 Login Window

Login Window