In the world of enterprise IT, “support” can mean many things. For some, it’s a safety net – insurance for the day something breaks. For others, it’s the difference between a minor hiccup and a full-scale outage. At Canonical, it means a simple, comprehensive subscription that takes care of everything, so that everything you build works the way you want it to, for all the people who love to use it.

This post demystifies what “support” means in the context of Linux, how it differs from security maintenance, and why both are essential for modern organizations. Through real-world examples and deep dives into Canonical’s support processes, you’ll discover how Ubuntu Pro + Support empowers teams to build, scale, and secure their infrastructure with confidence.

Linux support vs security maintenance: why both matter

To begin, we’ll briefly disambiguate two related, but distinct, concepts: security maintenance and support.

We’ll use the example of Ubuntu Pro + Support to illustrate the difference.

- Security maintenance: Up to 12 years of security patches for over 36,000 packages, on top of kernel livepatching and compliance tooling (FIPS, CIS, DISA-STIG). This is your proactive defense, keeping systems resilient and compliant. This is the “Ubuntu Pro” part of Ubuntu Pro + Support.

- Break-fix and bug-fix support: When something goes wrong – whether it’s a sudden outage, a failed upgrade, or a mysterious performance drop – our engineers are there to help you troubleshoot, resolve the issue and recover. This is the “Support” part of Ubuntu Pro + Support.

Security maintenance keeps you safe. Linux support gets you back up and running, fast. These two concepts reinforce each other, but serve different purposes.
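As a rough illustration of the security maintenance half, the sketch below lists which Ubuntu Pro services are switched on for a machine. It assumes the output of `pro status --format json` has been captured as a string. The sample payload and the field names (`attached`, `services`, `name`, `status`) are trimmed-down assumptions made for this example, not a complete or authoritative schema.

```python
import json

# Hypothetical, trimmed-down sample of what `pro status --format json` might return.
# The field names used here are assumptions for this sketch.
SAMPLE_STATUS = """
{
  "attached": true,
  "services": [
    {"name": "esm-infra", "status": "enabled"},
    {"name": "esm-apps", "status": "enabled"},
    {"name": "livepatch", "status": "enabled"},
    {"name": "fips", "status": "disabled"}
  ]
}
"""


def enabled_services(status_json: str) -> list[str]:
    """Return the names of services reported as enabled, or [] if not attached."""
    status = json.loads(status_json)
    if not status.get("attached", False):
        return []
    return [
        service["name"]
        for service in status.get("services", [])
        if service.get("status") == "enabled"
    ]


if __name__ == "__main__":
    print(enabled_services(SAMPLE_STATUS))
```

A check like this only covers patching and compliance services. It says nothing about the break-fix side, which is delivered by engineers rather than by a client on the machine.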

Read more about the difference between Linux Support and Security Maintenance >

What does Ubuntu Pro + Support actually do?

The last line of defense – and the first to respond

Canonical’s support team is more than a help desk. We’re the people who make and maintain the software you’re running. When you hit a wall – whether it’s a Sev 1 outage, a subtle configuration bug, or a device-specific problem – we’re always there when you need a solution. Our expertise spans every layer of the stack: from the kernel to Kubernetes, from Ceph to OpenStack, and from cloud and applications like PostgreSQL to Ubuntu Core and snaps.

Our team’s value lies in combining deep technical expertise in Linux and Canonical products with creative problem solving and cross-team collaboration.

Let’s zoom in on a real-world example to see what this looks like in action.

What Linux support looks like in practice

A customer (a global SaaS provider) contacted the support team about problems with a self-implemented Landscape instance running in conjunction with FIPS. Landscape is Canonical’s systems management tool for device fleets. This customer was migrating to Ubuntu for cost savings and strategic alignment, and needed to implement FIPS-certified cryptography at scale, using Landscape to do so.

However, as they scaled their deployment to around a thousand clients on their AWS-hosted Landscape instance, they began encountering overloads regardless of instance sizing, leading to service collapses and restarts.

Canonical’s support team, including the customer’s Technical Account Manager, led a deep investigation. Working across product and engineering, we identified that the FIPS changes introduced additional overhead and random failures in Landscape. Given that compliance obligations to NIST required strict adherence to FIPS standards, we couldn’t simply pause or remove the FIPS cryptography.

Instead, in a couple of days, our engineers helped the customer implement SSL termination outside of Landscape, offloading the cryptographic burden while maintaining compliance. This agile, collaborative solution allowed the customer to scale their SaaS platform on Ubuntu without having to rebuild their entire management stack. Our team upheld functionality, with no compromises on security.

Breadth of experience, depth of knowledge

Over the years, we’ve seen it all, and helped developers solve difficult, business-threatening, or simply irritating problems in their work and systems. We’ve already touched on an example related to compliance.

Let’s take a look at what else we do to help when things go wrong.

Protecting thousands of VMs from global outages

One customer, running a golden Ubuntu image (a standardized, pre-configured virtual machine template used to ensure consistency across large deployments), hit a wall when a restart script failed across a fleet of thousands of VMs.

To stop the spread, our team quickly suspended the customer’s Ubuntu Pro tokens remotely to prevent the machines from trying to get ESM updates, which would trigger the restart script. The tokens were not the issue; however, this action ensured that the script stayed confined to the machines it had initially affected. Our team then worked with the customer to diagnose the root cause, saving hours of potential downtime and guiding them toward better fleet management practices that prevent a repeat of the issue.

Eliminating Ceph cluster latency headaches

A customer running Ceph experienced high latency in their cluster. Our support team led a multi-day investigation and concluded that the origin was a problematic OSD (Object Storage Daemon, the core component responsible for storing data on disk and providing access to it over the network). Ceph support cases are generally lengthy and convoluted, but this customer needed improvements in a matter of days; otherwise, their business would be severely impacted. The support team went above and beyond: providing step-by-step instructions to replace the OSD and rebalance the cluster, offering daily check-ins, delivering improvements within a couple of days, and ultimately supporting the implementation of a sustainable fix.
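To give a feel for how a single misbehaving OSD can stand out, here is a minimal sketch that flags OSDs whose latency sits far above the cluster median. It is not the method Canonical’s engineers used in this case. The function name, the threshold values, and the sample figures are all hypothetical, and the input is assumed to be per-OSD commit latencies in milliseconds of the kind `ceph osd perf` reports.

```python
from statistics import median


def find_slow_osds(
    commit_latency_ms: dict[int, float],
    factor: float = 3.0,
    floor_ms: float = 10.0,
) -> list[int]:
    """Return ids of OSDs whose latency exceeds `factor` times the median.

    `floor_ms` keeps very quiet clusters from flagging harmless jitter.
    Both thresholds are arbitrary values chosen for this illustration.
    """
    if not commit_latency_ms:
        return []
    baseline = median(commit_latency_ms.values())
    threshold = max(baseline * factor, floor_ms)
    return sorted(
        osd_id for osd_id, ms in commit_latency_ms.items() if ms > threshold
    )


# Hypothetical latencies (ms) keyed by OSD id; OSD 4 is the outlier.
SAMPLE_LATENCIES = {0: 4.0, 1: 5.0, 2: 6.0, 3: 5.0, 4: 95.0}

if __name__ == "__main__":
    print(find_slow_osds(SAMPLE_LATENCIES))
```

In a real investigation a latency outlier is only a starting point. Disk health, network paths, and placement group state all need checking before an OSD is replaced.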

Taking on long-running performance issues in the cloud

When a major performance degradation emerged after the OpenStack 2024.1 (Caracal) release, the sustaining engineering team worked for months to identify the root cause in a complex OpenStack environment. Their persistence led to an upstream fix that resolved the issue for all users, not just the customer who originally flagged it.

Deep-dive investigations that go beyond support

In one case, a customer escalated a network performance regression on AMD CPUs, believing there to be a software issue. The sustaining engineering team spent weeks analyzing kernel versions, performance counters, and lab setups, in order to identify the origin of the regression. Ultimately, they determined that the issue was hardware-related, not a software bug. The customer was so impressed they offered direct feedback, calling the support “superb” and asking how to recommend the engineer.

Collaborative bug fixes with industry impact

When a streaming service’s Ceph cluster suffered random restarts under bandwidth pressure, the sustaining engineering team worked hand-in-hand with both the customer and upstream communities. The fix not only stabilized the customer’s environment, but also earned external recognition, including a Google Open Source Peer Bonus for the Canonical engineer who contributed the patch.

Beyond break-fix: preventative and strategic Linux support

Support isn’t just for when things go wrong. It’s for when you’re doing something completely new, and need people with expertise on-hand to advise and troubleshoot issues.

Many customers open low-priority cases ahead of sensitive network changes or major upgrades. In these cases, we can assign engineers with domain-specific expertise – like deep OVN knowledge for telecom customer maintenance windows – so we’re ready to respond instantly if an incident occurs. It’s not proactive monitoring, but it’s a smarter, more prepared way to handle risk.

For example, we often field dozens of questions from customers about their devices. By attending to these small issues with the correct information, direct from engineers, we avoid bigger problems before they even begin. We go deep too: onboarding checklists, infrastructure reviews, and ongoing support ensure you’re confident from day one. When you come to us with a case, we already know your environment and can dive straight into solving your problem. Whether it’s a question on security and compliance, a request for new features, or a complicated operational challenge, we’re here to hold your hand and provide clarity and peace of mind.

Sometimes, the solution isn’t in the docs. That’s when we go the extra mile: jumping on a targeted video call for rapid log collection, or collaborating with your team to design a workaround that fits your environment. For example, a customer once came to us looking to implement FIPS compliance for over a thousand clients on AWS. When SSL operations under FIPS began overloading their system, our engineers collaborated across product and support teams to devise a creative workaround: offloading SSL to a load balancer and applying a targeted hotfix. What could have taken months to resolve was fixed within days, with minimal disruption.

We’ll guide you through upgrades and migrations according to best practices, but we don’t replace consulting or provide custom implementations. Clear boundaries mean you get the Linux support you expect – and nothing less.

Conclusion

Ubuntu Pro + Support is more than a break-fix hotline – it’s a partnership built on real experience, deep technical knowledge, and a commitment to helping you run open source infrastructure with confidence. Whether you’re facing a Sev 1 outage, a complex compliance challenge, or just need a sounding board for your next upgrade, Canonical’s support team is there – with the breadth, depth, and agility that modern enterprises demand.

With Ubuntu Pro + Support, you get more than answers. You get a partner in operational excellence, a bridge to upstream innovation, and a foundation for secure, resilient, and scalable Linux infrastructure.

Get in touch now to see how we can support your specific needs >

Simple command-line interface

Simple command-line interface Secure API key and model management with

Secure API key and model management with  Clean output (only the AI response, no JSON clutter)

Clean output (only the AI response, no JSON clutter) Natural language queries

Natural language queries Uses free Mistral model by default

Uses free Mistral model by default Stateless design – each query is independent (no conversation history)

Stateless design – each query is independent (no conversation history)

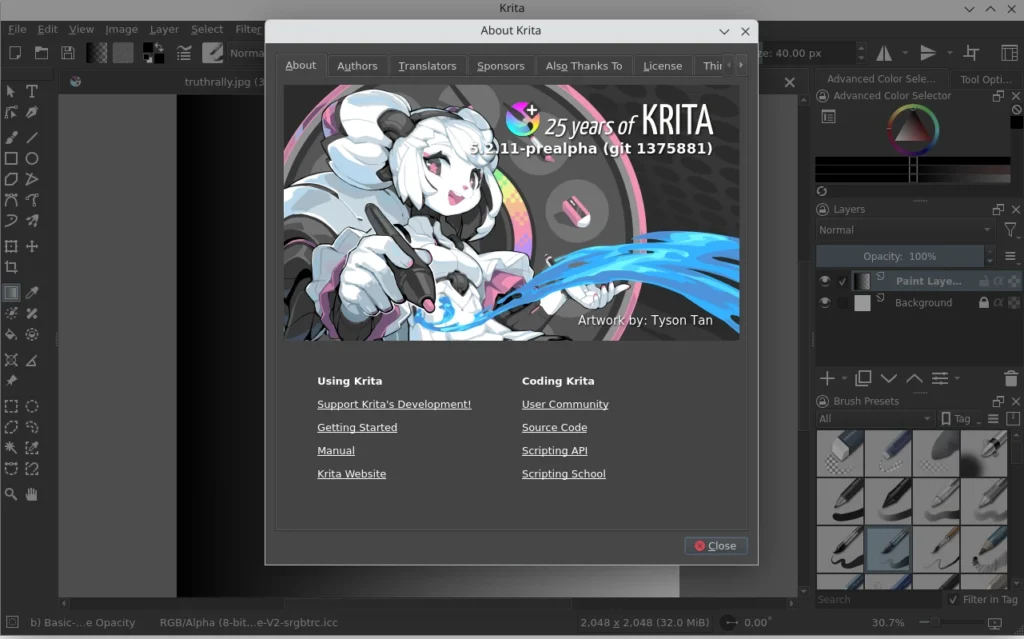

KDE Mascot

KDE Mascot

What You’ll Need

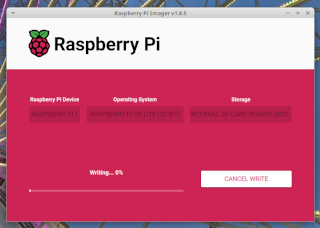

What You’ll Need Step 1: Prepare the Raspberry Pi

Step 1: Prepare the Raspberry Pi Step 2: Install Plex Media Server

Step 2: Install Plex Media Server Step 3: Enable and Start the Service

Step 3: Enable and Start the Service Step 4: Access Plex Web Interface

Step 4: Access Plex Web Interface Step 5: Add Your Media Library

Step 5: Add Your Media Library Optional Tips

Optional Tips Secure Your Server

Secure Your Server Conclusion

Conclusion What is 0 A.D.?

What is 0 A.D.? Historically accurate civilizations

Historically accurate civilizations Dynamic and random map generation

Dynamic and random map generation Tactical land and naval combat

Tactical land and naval combat City-building with tech progression

City-building with tech progression AI opponents and multiplayer support

AI opponents and multiplayer support Why It’s Perfect for Linux Users

Why It’s Perfect for Linux Users Native Linux Support

Native Linux Support Vulkan Renderer and FSR Support

Vulkan Renderer and FSR Support Rolling Updates and Dev Engagement

Rolling Updates and Dev Engagement What Makes the Gameplay So Good?

What Makes the Gameplay So Good? Multiplayer and Replays

Multiplayer and Replays Multiplayer save and resume support

Multiplayer save and resume support Observer tools (with flares, commands, and overlays)

Observer tools (with flares, commands, and overlays) Replay functionality to study your tactics or cast tournaments

Replay functionality to study your tactics or cast tournaments Community and Contribution

Community and Contribution How to Install on Linux

How to Install on Linux Visit

Visit

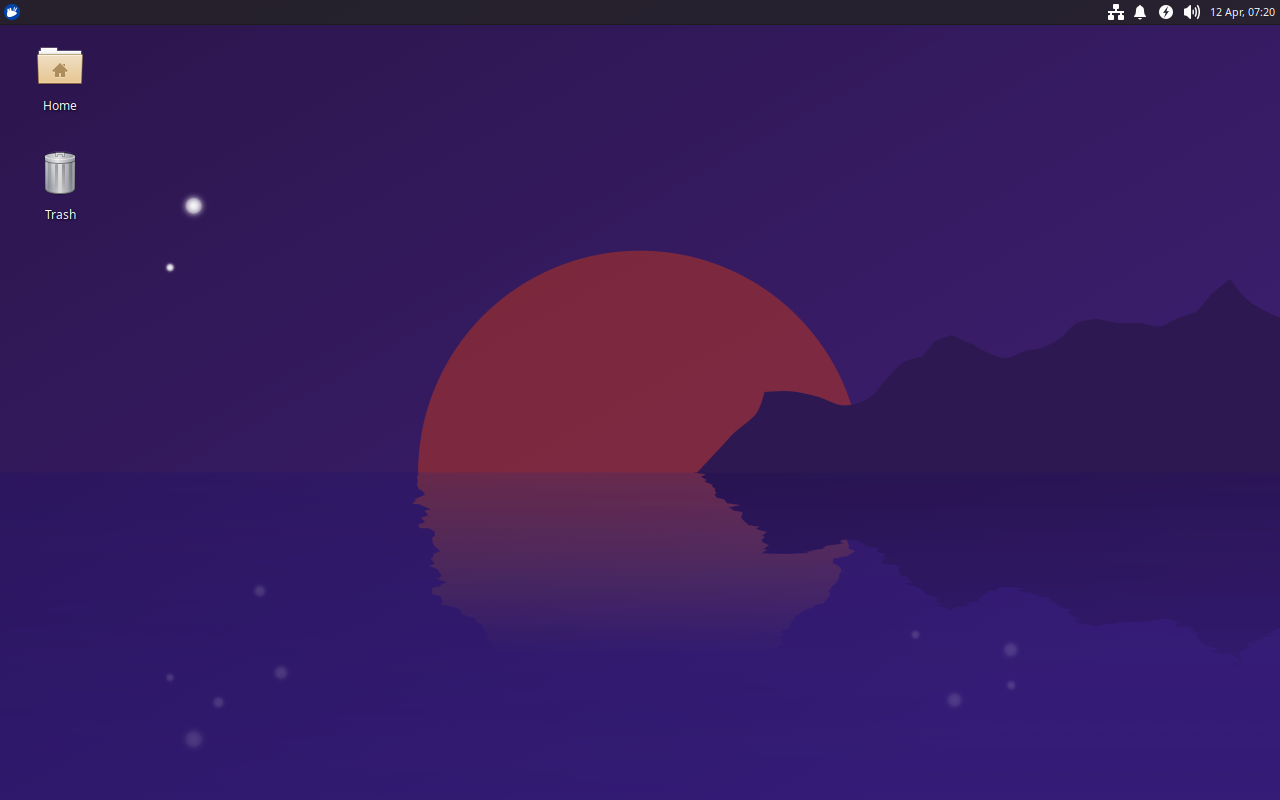

Xubuntu 25.04, featuring the latest updates from Xfce 4.20 and GNOME 48.

Xubuntu 25.04, featuring the latest updates from Xfce 4.20 and GNOME 48.

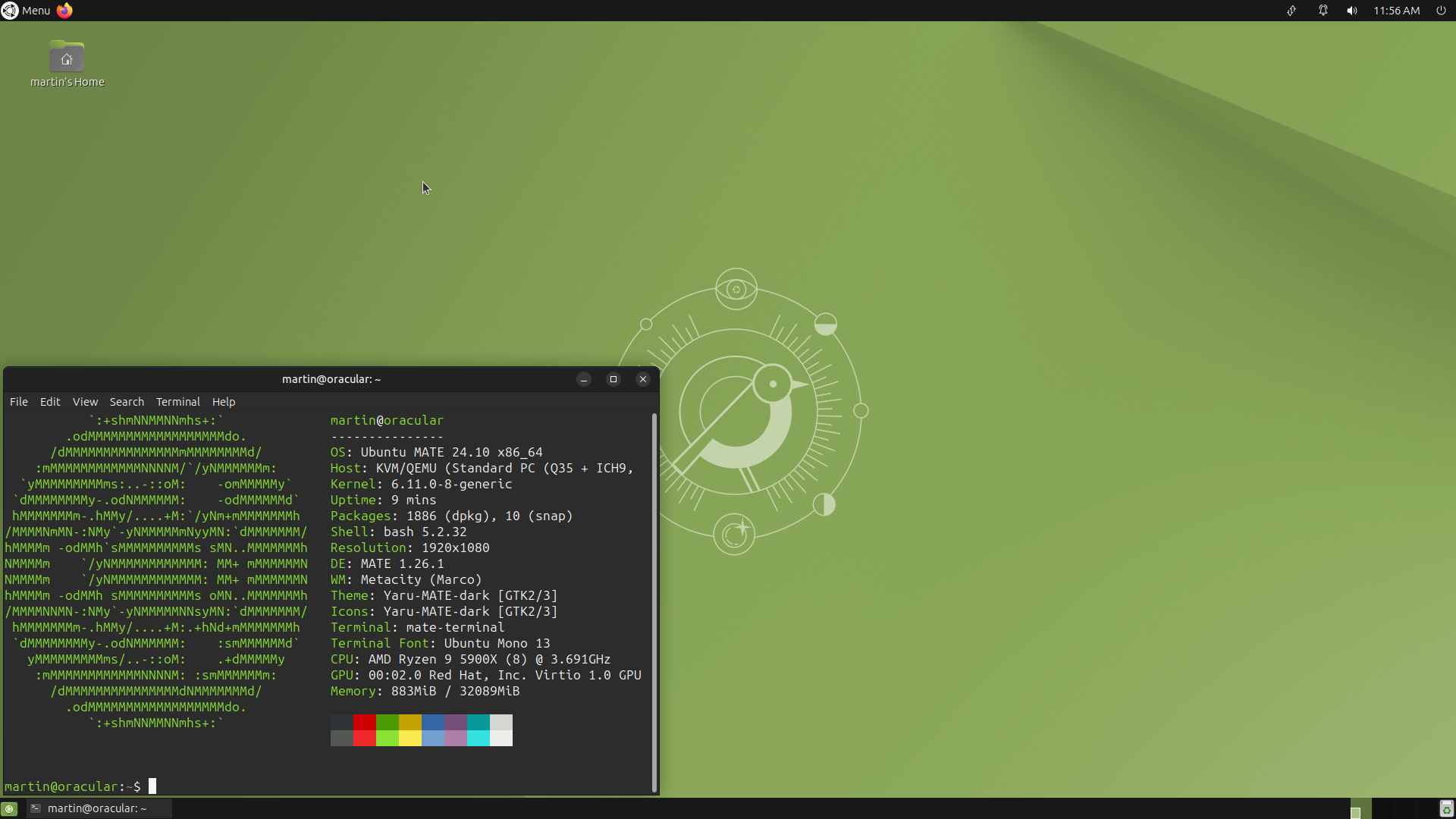

Ubuntu MATE 24.10

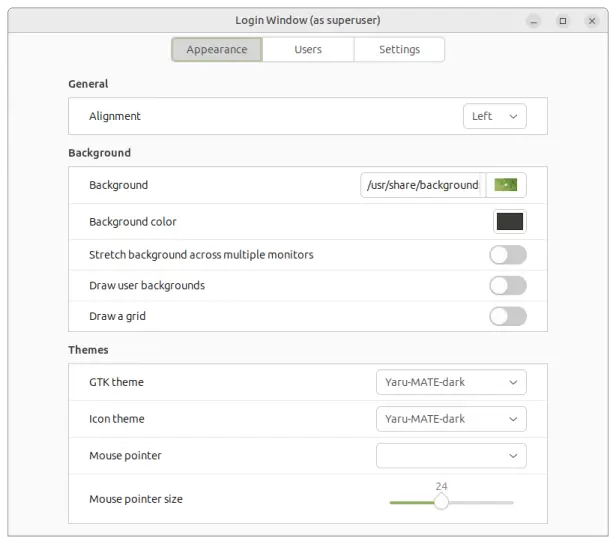

Ubuntu MATE 24.10 Login Window

Login Window